Neural manifolds carry reactivation of phonetic representations during semantic processing

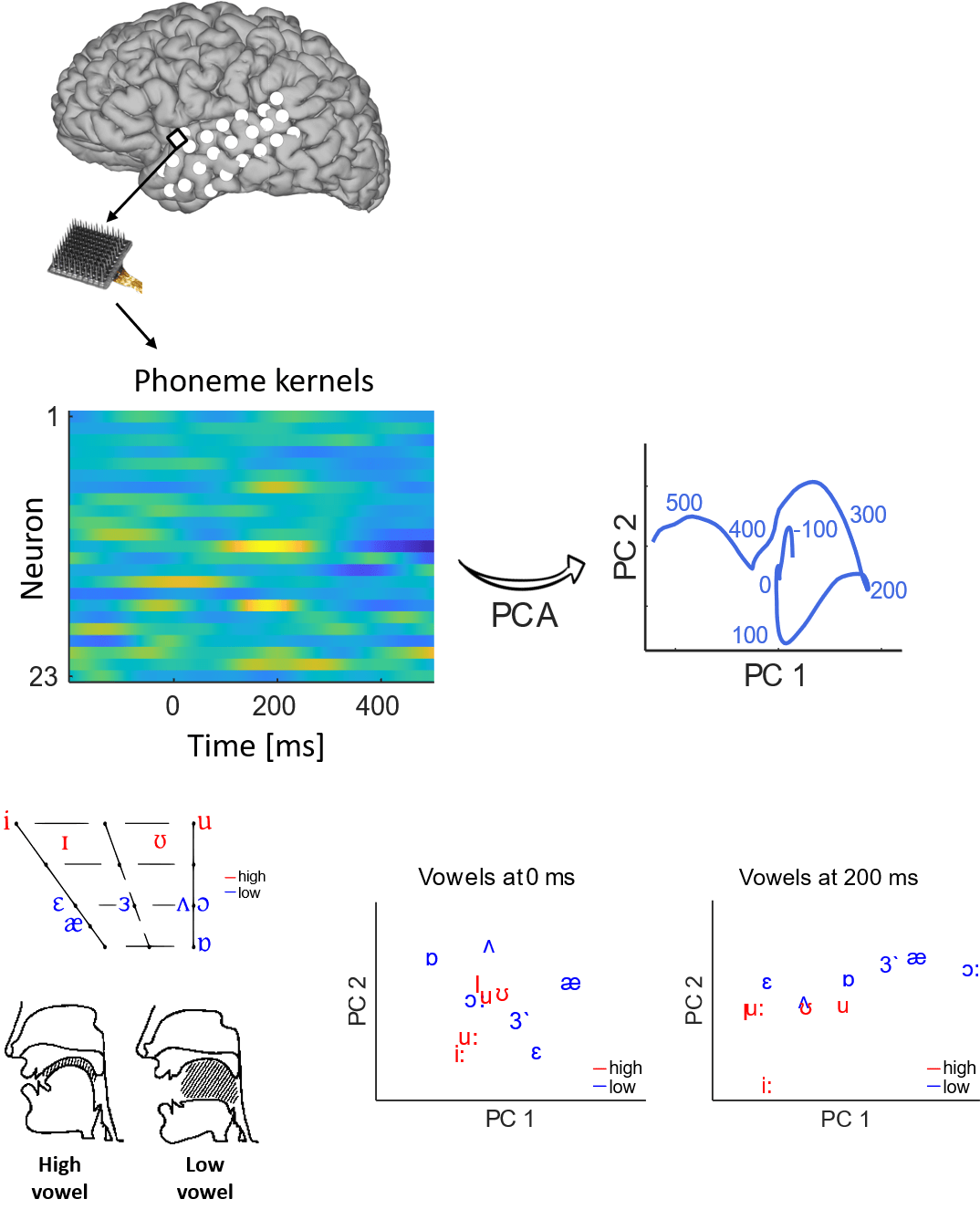

How does the brain process speech? Our study shows that the transformation of sounds into meaning relies on the collective dynamics of neuronal populations.

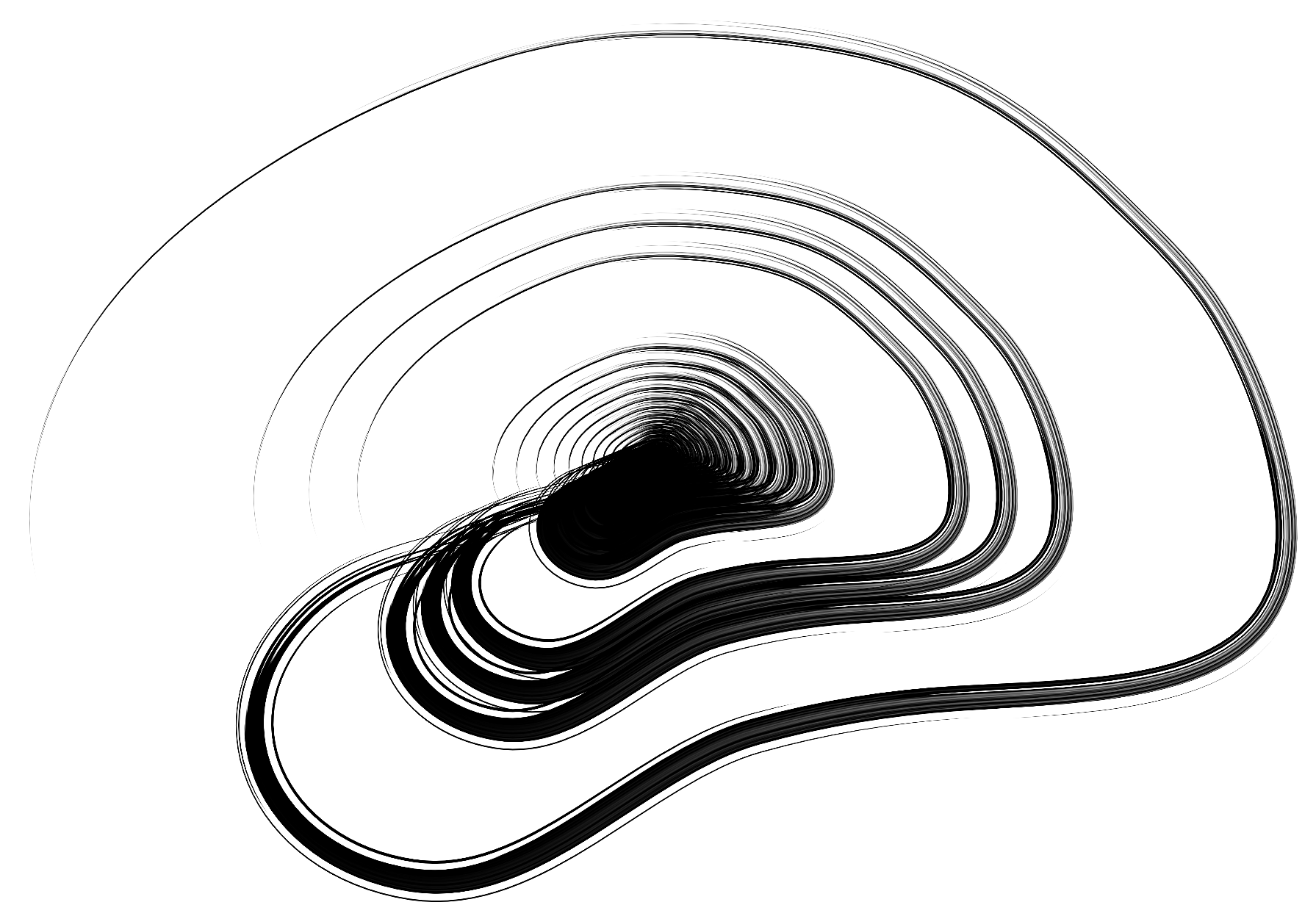

Speech comprehension involves several stages, from recognizing basic sounds to understanding the overall meaning of what is said. While researchers have studied how the brain handles individual aspects of speech processing, our study focuses on how the brain transforms representations of information as it moves from one stage to the next. Previous research in animals has shown that understanding many brain functions requires analyzing how groups of neurons work together, known as neural population dynamics. We applied this concept to the study of speech.

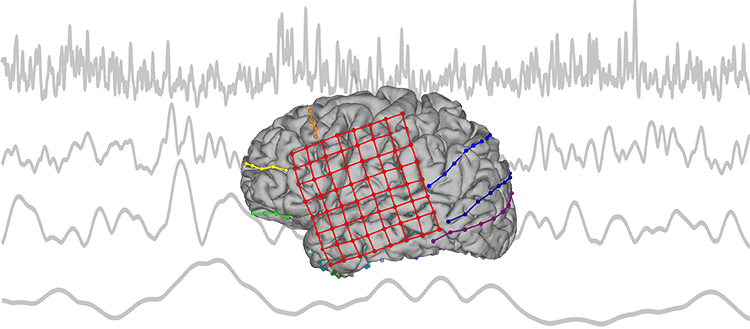

We analyzed data from a microelectrode array placed in a brain area involved in processing both speech sounds and their meanings. We also recorded activity in the temporal lobe with an electrocorticography grid. Our analysis revealed how the brain's neural populations transform speech sounds into meaningful information, both during a controlled task and during natural speech perception. We identified patterns in the neural activity that predicted both the acoustic properties of sounds and their meanings, showing that the brain organizes this information step by step. These findings were consistent across tasks and specific to the dynamics of the neuronal populations we studied: even the electrocorticography electrodes located directly above the microelectrode array did not capture the same patterns.
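The summary does not say which dimensionality-reduction or decoding method was used. As a hedged illustration of the general idea only, the sketch below fits a linear neural manifold with PCA and then decodes a stimulus feature from the manifold coordinates with ridge regression. The function names, the 96-channel synthetic data, the "acoustic feature", and the choice of PCA plus ridge regression are all assumptions made for illustration, not the study's pipeline.

```python
import numpy as np

# Illustrative sketch only: PCA + ridge regression are assumed, not the study's method.

def estimate_manifold(rates: np.ndarray, n_dims: int):
    """Fit an n_dims-dimensional linear manifold to population activity with PCA.

    rates: (n_samples, n_neurons) array, e.g. binned firing rates stacked over
    time bins and trials. Returns the mean rate vector and an orthonormal basis
    of shape (n_neurons, n_dims).
    """
    mean = rates.mean(axis=0)
    # Rows of vt are principal axes, sorted by explained variance.
    _, _, vt = np.linalg.svd(rates - mean, full_matrices=False)
    return mean, vt[:n_dims].T

def project(rates, mean, basis):
    """Latent (manifold) coordinates of each sample."""
    return (rates - mean) @ basis

def _with_intercept(z):
    return np.hstack([z, np.ones((z.shape[0], 1))])

def fit_ridge(z, target, alpha=1.0):
    """Closed-form ridge regression from latent coordinates to a stimulus feature."""
    X = _with_intercept(z)
    penalty = alpha * np.eye(X.shape[1])
    penalty[-1, -1] = 0.0  # leave the intercept unpenalized
    return np.linalg.solve(X.T @ X + penalty, X.T @ target)

def predict(z, w):
    return _with_intercept(z) @ w

# Synthetic demo (hypothetical data): 96 channels driven by 3 hidden latent variables.
rng = np.random.default_rng(0)
n_samples, n_neurons, n_latent = 500, 96, 3
latent = rng.standard_normal((n_samples, n_latent))
mixing = rng.standard_normal((n_latent, n_neurons))
rates = latent @ mixing + 0.1 * rng.standard_normal((n_samples, n_neurons))
feature = 2.0 * latent[:, 0] + 0.5  # hypothetical stand-in for an acoustic feature

# Fit manifold and decoder on the first 400 samples, evaluate on the rest.
train, test = slice(0, 400), slice(400, None)
mean, basis = estimate_manifold(rates[train], n_dims=n_latent)
w = fit_ridge(project(rates[train], mean, basis), feature[train], alpha=1e-3)
pred = predict(project(rates[test], mean, basis), w)
r = np.corrcoef(pred, feature[test])[0, 1]
print(f"held-out correlation: {r:.3f}")
```

Scoring on samples not used for fitting keeps the evaluation honest; with real recordings, the number of manifold dimensions would itself need to be chosen, for example by cross-validation.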

In summary, our research provides insight into how the brain processes speech and supports major theories in psycholinguistics. By clarifying the neural mechanisms that transform speech sounds into meaning, we contribute to the broader understanding of speech processing. We also show that neural manifolds, which describe how groups of neurons work together, are a useful tool for studying speech in the brain.

Imagined speech can be decoded from low- and cross-frequency intracranial EEG features

Reconstructing intended speech from neural activity using brain-computer interfaces holds great promise for people with severe speech production deficits. While decoding overt speech has progressed, decoding imagined speech has had limited success, mainly because the associated neural signals are weak and variable compared to those of overt speech, and hence difficult for learning algorithms to decode. We obtained three electrocorticography datasets from 13 patients with electrodes implanted for epilepsy evaluation, who performed overt and imagined speech production tasks. Based on recent theories of speech neural processing, we extracted consistent and specific neural features usable for future brain-computer interfaces and assessed their performance in discriminating speech items in articulatory, phonetic, and vocalic representation spaces. While high-frequency activity provided the best signal for overt speech, both low- and higher-frequency power and local cross-frequency coupling contributed to imagined speech decoding, in particular in the phonetic and vocalic (i.e., perceptual) spaces. These findings show that low-frequency power and cross-frequency dynamics contain key information for imagined speech decoding.
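As a rough sketch of the two feature families named above, assuming a single-channel recording sampled at `fs` Hz, the code below computes log band power and a phase-amplitude coupling measure (a Tort-style modulation index, one common cross-frequency measure). The frequency bands, the function names, and the brick-wall FFT filter are illustrative assumptions; the study's actual bands and coupling metric are not given here, and a real pipeline would use properly designed FIR or IIR filters.

```python
import numpy as np

def bandpass_fft(x, fs, lo, hi):
    """Crude zero-phase band-pass: zero every FFT bin outside [lo, hi] Hz."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spec[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spec, n=x.size)

def analytic_signal(x):
    """Analytic signal via the FFT (the construction behind a Hilbert transform)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)

def log_band_power(x, fs, band):
    """Log of the mean power of x within a frequency band."""
    return np.log(np.mean(bandpass_fft(x, fs, *band) ** 2))

def modulation_index(x, fs, phase_band, amp_band, n_bins=18):
    """Tort-style phase-amplitude coupling: normalized KL divergence of the
    phase-binned amplitude distribution from uniform (0 means no coupling)."""
    phase = np.angle(analytic_signal(bandpass_fft(x, fs, *phase_band)))
    amp = np.abs(analytic_signal(bandpass_fft(x, fs, *amp_band)))
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    bins = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    mean_amp = np.array([amp[bins == k].mean() for k in range(n_bins)])
    p = mean_amp / mean_amp.sum()
    return (np.log(n_bins) + np.sum(p * np.log(p))) / np.log(n_bins)

# Hypothetical bands, chosen only for the demo below.
LOW_BAND, HIGH_BAND = (4.0, 8.0), (70.0, 110.0)

def features(x, fs):
    """Feature vector: [low-frequency power, high-frequency power, low->high PAC]."""
    return np.array([
        log_band_power(x, fs, LOW_BAND),
        log_band_power(x, fs, HIGH_BAND),
        modulation_index(x, fs, LOW_BAND, HIGH_BAND),
    ])

# Synthetic demo: a 6 Hz rhythm whose phase does / does not modulate an 80 Hz rhythm.
fs = 1000.0
t = np.arange(int(10 * fs)) / fs
rng = np.random.default_rng(1)
slow = np.sin(2 * np.pi * 6 * t)
fast = 0.3 * np.sin(2 * np.pi * 80 * t)
noise = 0.1 * rng.standard_normal(t.size)
coupled = slow + (1 + 0.8 * slow) * fast + noise
uncoupled = slow + fast + noise
f_coupled, f_uncoupled = features(coupled, fs), features(uncoupled, fs)
print(f_coupled, f_uncoupled)
```

Only the third feature separates the two synthetic signals, since both share the same band power but only one has phase-amplitude coupling. Feature vectors like these could then be passed to a classifier that discriminates speech items; that step is omitted here.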